PhotoRag: Advanced Retrieval-Augmented Generation with ColPali and OpenAI

The PhotoRag system combines advanced image and document retrieval capabilities with natural language processing (NLP). By utilizing the ColQwen2 model for image embeddings and GPT-4o for enhanced image understanding, PhotoRag offers a robust solution for various use cases such as image indexing, querying, and document handling.

The models used for this project:

- vidore/colqwen2-v0.1 (ColPali): visual retriever

- OpenAI (gpt-4o): text generation based on images

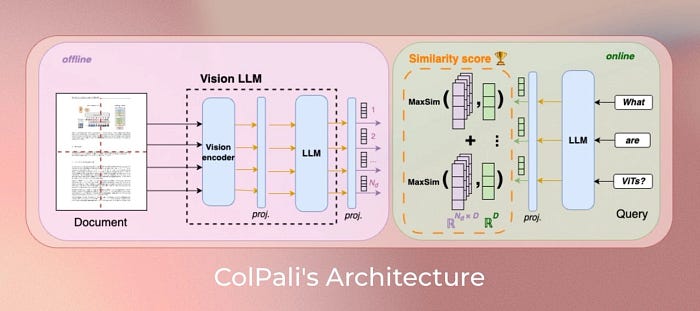

What is ColPali?

ColPali is an innovative document retrieval model that uses Vision Language Models (VLMs) to quickly and efficiently find information in documents just by looking at their visual features. This model was created by researchers including Manuel Faysse, Hugues Sibille, and Tony Wu, and it’s a big step forward in understanding and retrieving documents through text and visuals.

Here’s what makes ColPali stand out:

- It indexes and retrieves documents based only on their appearance (no need for extra text processing).

- It performs well at finding the right information in documents.

- It can handle different types of documents, like those with text, tables, or images.

- The whole system can be trained from start to finish without needing a complex setup.

- When you search, it responds quickly.

Why is ColPali useful?

Traditional document retrieval systems have a lot of challenges, like:

- They need complicated systems to process PDFs.

- They rely on Optical Character Recognition (OCR) to read text.

- They struggle with visual elements like tables and images.

- They can take a long time to index documents.

ColPali solves these problems by working directly with the images of documents. This means no need for extra steps like OCR or layout detection, and it makes the retrieval process faster and more accurate.
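To make "working directly with the images" a bit more concrete: ColPali-style retrievers keep one embedding vector per image patch and one per query token, and compare them with late-interaction (MaxSim) scoring. As far as I understand, this is the kind of score that `processor.score_multi_vector` computes in Part 5. Here is a minimal pure-Python sketch of the idea; the function names and the toy 2-dimensional vectors are made up for illustration and are not model output.

```python
from typing import Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: Sequence[Vector], page_vecs: Sequence[Vector]) -> float:
    """Late-interaction (MaxSim) score between one query and one page.

    For every query token vector, take its best match among the page patch
    vectors, then sum those best matches.
    """
    return sum(max(dot(q, p) for p in page_vecs) for q in query_vecs)


# Toy embeddings (illustrative assumption, not real ColQwen2 output)
query = [[1.0, 0.0], [0.0, 1.0]]
page_a = [[1.0, 0.0], [0.5, 0.5]]
page_b = [[0.2, 0.1], [0.1, 0.2]]

scores = {
    "page_a": maxsim_score(query, page_a),
    "page_b": maxsim_score(query, page_b),
}
best_page = max(scores, key=scores.get)
print(scores, best_page)
```

One thing this sketch shows: an all-zero row appended to the query adds nothing to the score, which is why zero-padding the query to a fixed length is harmless.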

You can check out the model here: https://huggingface.co/vidore/colqwen2-v0.1

Let’s see how we can build PhotoRag.

Part 1: Requirements we are going to use

    !pip install git+https://github.com/illuin-tech/colpali -q
    !pip install pdf2image -q
    !apt-get install poppler-utils -y

    import base64
    import io
    import pickle
    import sqlite3

    import numpy as np
    import requests
    import torch
    from IPython.display import HTML, display
    from pdf2image import convert_from_path
    from PIL import Image

    from colpali_engine.models import ColQwen2, ColQwen2Processor

Part 2: Loading the model and processor

    # Use "cuda" when a GPU is available, otherwise "cpu" (set "mps" manually on Apple Silicon)
    device_map = "cuda" if torch.cuda.is_available() else "cpu"

    model = ColQwen2.from_pretrained(
        "vidore/colqwen2-v0.1",
        torch_dtype=torch.bfloat16,
        device_map=device_map,
    )

    processor = ColQwen2Processor.from_pretrained("vidore/colqwen2-v0.1")

Part 3: Using SQLite as a vector store

Adding Images to Vector Store

- Generates ColQwen embeddings

- Checks for duplicate data

- Stores in SQLite
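The steps above need two things per image before anything is stored: a base64 string and a hash for the duplicate check. The post does not show how these are produced, so here is a minimal sketch under my own assumptions: SHA-256 as the hash, a hypothetical helper `encode_image_bytes`, and a hypothetical `add_file_to_index` wrapper that takes the indexing function (for example `process_and_index_image` from Part 4) as an extra `index_fn` argument.

```python
import base64
import hashlib
import io
from pathlib import Path
from typing import Tuple


def encode_image_bytes(data: bytes) -> Tuple[str, str]:
    """Return (base64 string, SHA-256 hex digest) for raw image bytes."""
    img_str = base64.b64encode(data).decode("utf-8")
    image_hash = hashlib.sha256(data).hexdigest()
    return img_str, image_hash


def add_file_to_index(path, processor, model, index_fn):
    """Hypothetical wrapper: read an image file and pass it to `index_fn`.

    `index_fn` is assumed to accept (image, img_str, image_hash, processor, model).
    """
    # Imported lazily so the encoding helper above needs only the standard library
    from PIL import Image

    data = Path(path).read_bytes()
    img_str, image_hash = encode_image_bytes(data)
    image = Image.open(io.BytesIO(data)).convert("RGB")
    index_fn(image, img_str, image_hash, processor, model)
```

Inside the notebook, where the indexing function is a global, `index_fn` could simply default to it so that the three-argument call used in Part 6 works unchanged.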

Database Schema

    CREATE TABLE embeddings (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        image_base64 TEXT,
        image_hash TEXT UNIQUE,
        embedding BLOB
    )

Function to get a database connection:

    def get_db_connection():
        conn = sqlite3.connect('image_embeddings.db')
        return conn
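Because `image_hash` is declared `UNIQUE`, SQLite can also enforce the duplicate rule on its own. The sketch below is one possible way to lean on that, using `INSERT OR IGNORE` and an in-memory database; `insert_once` is a hypothetical helper name and the row values are placeholders.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS embeddings (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    image_base64 TEXT,
    image_hash TEXT UNIQUE,
    embedding BLOB
)
"""


def insert_once(conn, img_str, image_hash, embedding_bytes):
    """Insert a row unless `image_hash` is already present.

    Returns True if a new row was written, False if it was a duplicate.
    """
    cur = conn.execute(
        "INSERT OR IGNORE INTO embeddings (image_base64, image_hash, embedding) "
        "VALUES (?, ?, ?)",
        (img_str, image_hash, embedding_bytes),
    )
    conn.commit()
    return cur.rowcount == 1


# In-memory database so the example leaves no file behind
conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)

first = insert_once(conn, "aGVsbG8=", "hash-1", b"\x00\x01")
second = insert_once(conn, "aGVsbG8=", "hash-1", b"\x00\x01")  # same hash again
row_count = conn.execute("SELECT COUNT(*) FROM embeddings").fetchone()[0]
print(first, second, row_count)
```

Checking for the hash before computing the embedding is still worthwhile, since it skips the expensive model call for images that are already indexed. The constraint is a safety net on top of that.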

Part 4: Function to process image data and store it as an index

    def process_and_index_image(image, img_str, image_hash, processor, model):
        # Open the database and create the table if needed
        conn = get_db_connection()
        c = conn.cursor()
        c.execute('''
            CREATE TABLE IF NOT EXISTS embeddings (
                id INTEGER PRIMARY KEY AUTOINCREMENT,
                image_base64 TEXT,
                image_hash TEXT UNIQUE,
                embedding BLOB
            )
        ''')

        # Check if the image hash already exists
        c.execute('SELECT id FROM embeddings WHERE image_hash = ?', (image_hash,))
        result = c.fetchone()
        if result:
            # Image already indexed
            conn.close()
            return

        # Process image to get embedding
        batch_images = processor.process_images([image]).to(model.device)
        with torch.no_grad():
            image_embeddings = model(**batch_images)
        image_embedding = image_embeddings[0].cpu().to(torch.float32).numpy()

        # Serialize the embedding and store it
        embedding_bytes = pickle.dumps(image_embedding)
        c.execute(
            'INSERT INTO embeddings (image_base64, image_hash, embedding) VALUES (?, ?, ?)',
            (img_str, image_hash, embedding_bytes),
        )
        conn.commit()
        conn.close()
        print("Your Data is successfully processed")

Part 5: Function to retrieve related images and generate the response based on that image

Here we use OpenAI's gpt-4o model for response generation.
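The function in this part uses two names that are not defined anywhere in this post: `headers` (for the OpenAI request) and `clear_cache()`. A minimal sketch of what they might look like, assuming the API key lives in an `OPENAI_API_KEY` environment variable; `build_headers` is a hypothetical helper and the body of `clear_cache` is my guess at its intent.

```python
import gc
import os


def build_headers(api_key=None):
    """Build HTTP headers for the OpenAI REST API.

    Falls back to the OPENAI_API_KEY environment variable when no key is passed.
    """
    api_key = api_key or os.environ.get("OPENAI_API_KEY")
    if not api_key:
        raise ValueError("Set OPENAI_API_KEY or pass api_key explicitly")
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }


def clear_cache():
    """Release unused memory; also empties the CUDA cache when torch and a GPU are present."""
    gc.collect()
    try:
        import torch
    except ImportError:
        return  # torch not installed: nothing more to free
    if torch.cuda.is_available():
        torch.cuda.empty_cache()
```

With these in place, `headers = build_headers()` would be run once before calling the retrieval function.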

    def get_response(query):
        try:
            # Process the query
            with torch.no_grad():
                batch_query = processor.process_queries([query]).to(model.device)
                query_embedding = model(**batch_query)
            query_embedding_cpu = query_embedding.cpu().to(torch.float32).numpy()[0]

            # Retrieve image embeddings from the database
            conn = get_db_connection()
            c = conn.cursor()
            c.execute('SELECT image_base64, embedding FROM embeddings')
            rows = c.fetchall()
            conn.close()

            if not rows:
                return "No images found in the index. Please add images first."

            # Set a fixed sequence length
            fixed_seq_len = 620  # Adjust based on your embeddings

            image_embeddings_list = []
            image_base64_list = []

            for row in rows:
                image_base64, embedding_bytes = row
                embedding = pickle.loads(embedding_bytes)
                seq_len, embedding_dim = embedding.shape

                # Adjust embeddings to the fixed sequence length
                if seq_len < fixed_seq_len:
                    padding = np.zeros((fixed_seq_len - seq_len, embedding_dim), dtype=embedding.dtype)
                    embedding_fixed = np.concatenate([embedding, padding], axis=0)
                elif seq_len > fixed_seq_len:
                    embedding_fixed = embedding[:fixed_seq_len, :]
                else:
                    embedding_fixed = embedding

                image_embeddings_list.append(embedding_fixed)
                image_base64_list.append(image_base64)

            # Stack embeddings
            retrieved_image_embeddings = np.stack(image_embeddings_list)

            # Adjust the query embedding
            seq_len_q, embedding_dim_q = query_embedding_cpu.shape
            if seq_len_q < fixed_seq_len:
                padding = np.zeros((fixed_seq_len - seq_len_q, embedding_dim_q), dtype=query_embedding_cpu.dtype)
                query_embedding_fixed = np.concatenate([query_embedding_cpu, padding], axis=0)
            elif seq_len_q > fixed_seq_len:
                query_embedding_fixed = query_embedding_cpu[:fixed_seq_len, :]
            else:
                query_embedding_fixed = query_embedding_cpu

            # Convert embeddings to tensors
            query_embedding_tensor = torch.from_numpy(query_embedding_fixed).to(model.device).unsqueeze(0)
            retrieved_image_embeddings_tensor = torch.from_numpy(retrieved_image_embeddings).to(model.device)

            # Compute similarity scores
            with torch.no_grad():
                scores = processor.score_multi_vector(query_embedding_tensor, retrieved_image_embeddings_tensor)
            scores_np = scores.cpu().numpy().flatten()

            # Free up memory
            del query_embedding_tensor, retrieved_image_embeddings_tensor, scores
            clear_cache()

            # Combine images and scores, best match first
            similarities = list(zip(image_base64_list, scores_np))
            similarities.sort(key=lambda x: x[1], reverse=True)

            if similarities:
                # Take the most similar image
                img_str, score = similarities[0]
                img_data = base64.b64decode(img_str)
                image = Image.open(io.BytesIO(img_data))

                # Prepare API payload: the instructions and the image go
                # together in a single user message
                payload = {
                    "model": "gpt-4o",
                    "messages": [
                        {
                            "role": "user",
                            "content": [
                                {
                                    "type": "text",
                                    "text": (
                                        "Please answer the following question using only the information visible in the provided image.\n\n"
                                        "Do not use any of your own knowledge, training data, or external sources.\n\n"
                                        "Base your response solely on the content depicted within the image.\n\n"
                                        "If there is no relation with the question and the image, respond with: "
                                        "'Question is not related to image'.\n\n"
                                        f"Here is the question: {query}"
                                    ),
                                },
                                {
                                    "type": "image_url",
                                    "image_url": {"url": f"data:image/jpeg;base64,{img_str}"},
                                },
                            ],
                        }
                    ],
                }

                # Fetch the GPT-4o response
                # `headers` must carry your OpenAI API key (Authorization: Bearer ...)
                response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload).json()
                chat_response = response.get("choices")[0].get("message").get("content")

                # Generate HTML for display
                html_content = f"""
                <div style="display: flex; align-items: center; justify-content: space-between;">
                    <div style="flex: 1; padding: 20px;">
                        <h3>AI Response:</h3>
                        <p style="font-size: 16px; line-height: 1.5;">{chat_response}</p>
                    </div>
                    <div style="flex: 1; text-align: center;">
                        <h3>Most Similar Image:</h3>
                        <img src="data:image/jpeg;base64,{img_str}" style="max-width: 100%; border: 1px solid #ddd; border-radius: 8px;"/>
                        <p><strong>Similarity Score:</strong> {score:.4f}</p>
                    </div>
                </div>
                """

                # Display the HTML
                display(HTML(html_content))
            else:
                print("No similar images found. Try adding more images to the database.")

        except Exception as e:
            print(f"An error occurred: {str(e)}")

Part 6: Now it’s time to check the results

    # Add image to vector store
    img_path = "/kaggle/working/downloaded_image.png"
    add_file_to_index(img_path, processor, model)

    query = "can you explain how rag architecture works?"
    get_response(query)

Here is the response:

The system retrieves the most relevant document or image from the database and sends it, together with the query, to GPT-4o for analysis. The AI response then explains in detail how the RAG process works.

You can access the code here: Jupyter Notebook

If you have a question about this or want to connect with me, here are my profiles