The Magic Behind Self-Attention: Or How NLP Models Spy on Their Own Words!

Ah, self-attention in NLP — it’s like that one friend in a group chat who listens to everyone but mainly focuses on what they just said. You know, that friend who sends a meme and then watches how everyone reacts (and rates your LOLs on a scale from “meh” to “wow, they laughed”).

In this blog, we’re going to dive into self-attention in a funny, digestible way. Don’t worry if you’ve been terrified of attention mechanisms in the past — it’s like trying to understand how a cat thinks: complicated, but once you get it, it’s kinda cool.

What Even Is “Self-Attention”?

Imagine you’re at a party (because yes, NLP models party too). Every word in a sentence is trying to figure out what the other words are saying, while also deciding whether it is the most important word in the room.

Self-attention lets every word in a sentence look at all the other words, itself included, to understand its role in the sentence. The “self” part means the sentence is attending to itself: the words doing the looking and the words being looked at all come from the same sentence. Words are a bit narcissistic that way, each one acting like “I’m the hero of this world!”

Here is a famous saying of mine:

When words use self-attention:

Every word in the sentence: “I am the most important!”

How Does It Work? Let’s Break It Down with an Example

Let’s take a sentence like:

“The cat chased the mouse.”

In a world without self-attention, the word “chased” might just care about the next word, “the”. That’s fine for simple stuff, but what if it also wanted to know about “cat” and “mouse”? (Spoiler: it does).

Self-attention lets “chased” look at all the other words to figure out who’s doing the chasing and who’s being chased. So, instead of just looking next door, “chased” can glance at “cat”, “the”, and “mouse” — even itself — and determine which relationships matter the most.

Code Example: A Little Python Magic 🐍

Let’s hop into some basic code to illustrate how self-attention works.

import numpy as np

# New sentence

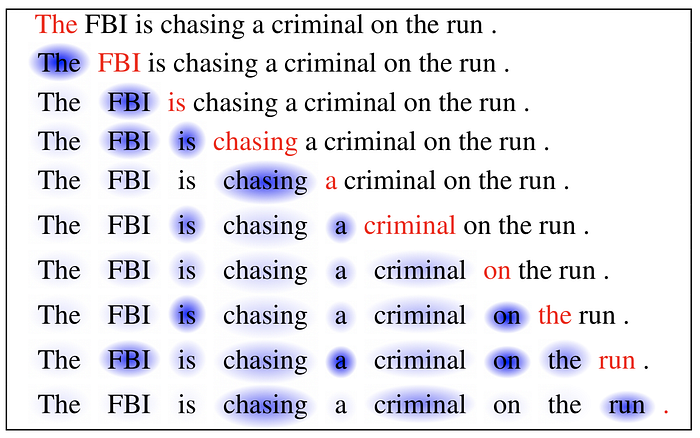

words = ["The", "FBI", "is", "chasing", "a", "criminal", "the", "run"]

# Random embedding vectors for each word (just for illustration!)

embeddings = {

"The": np.array([1.0, 0.2]),

"FBI": np.array([0.7, 0.9]),

"is": np.array([0.5, 0.3]),

"chasing": np.array([0.6, 1.0]),

"a": np.array([0.4, 0.2]),

"criminal": np.array([0.9, 0.8]),

"the": np.array([1.0, 0.1]),

"run": np.array([0.4, 0.5]),

}

# Function to calculate attention scores

def attention(query, key):

return np.dot(query, key) / (np.linalg.norm(query) * np.linalg.norm(key))

# Calculate attention between 'chasing' and every other word

chasing = embeddings["chasing"]

attention_scores = {word: attention(chasing, embeddings[word]) for word in words}

# Print out attention scores

print("Attention scores between 'chasing' and other words:")

for word, score in attention_scores.items():

print(f"Attention score between 'chasing' and '{word}': {score:.2f}")# Results from above code

Attention score between 'chasing' and 'The': 0.67

Attention score between 'chasing' and 'FBI': 0.99

Attention score between 'chasing' and 'is': 0.88

Attention score between 'chasing' and 'chasing': 1.00

Attention score between 'chasing' and 'a': 0.84

Attention score between 'chasing' and 'criminal': 0.95

Attention score between 'chasing' and 'the': 0.60

Attention score between 'chasing' and 'run': 0.99

What’s Happening in the Code?

- We’ve got a sentence represented as word embeddings (aka word vectors).

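- We calculate how much attention “chasing” gives to every word (itself included) using cosine similarity, which is the dot product divided by the lengths of the two vectors (because math is love, math is life).

- Words whose vectors point in a similar direction to “chasing” get a higher score. For example, “FBI” (who’s doing the chasing) scores 0.99, while “the” (which is just hanging around) scores 0.60. Keep in mind that these vectors were picked by hand, so the scores only illustrate the mechanics.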
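One thing the toy scores above skip: in an actual attention layer, the raw scores go through a softmax so they become weights that sum to 1, and those weights then blend the word vectors into a new, context-aware vector. Here is a minimal sketch of that extra step, reusing the same made-up 2-D embeddings. The helper names `softmax` and `attend`, and the choice to keep cosine similarity as the score, are assumptions made for illustration, not how a trained Transformer is wired.

```python
import numpy as np

# Same hand-picked toy embeddings as in the example above (not learned values)
embeddings = {
    "The": np.array([1.0, 0.2]),
    "FBI": np.array([0.7, 0.9]),
    "is": np.array([0.5, 0.3]),
    "chasing": np.array([0.6, 1.0]),
    "a": np.array([0.4, 0.2]),
    "criminal": np.array([0.9, 0.8]),
    "the": np.array([1.0, 0.1]),
    "run": np.array([0.4, 0.5]),
}

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / exps.sum()

def attend(query_word):
    """Return (weight per word, blended context vector) for one query word."""
    words = list(embeddings)
    vectors = np.stack([embeddings[w] for w in words])  # shape (8, 2)
    query = embeddings[query_word]
    # Cosine similarity between the query and every word, as in the example above
    scores = vectors @ query / (np.linalg.norm(vectors, axis=1) * np.linalg.norm(query))
    weights = softmax(scores)
    context = weights @ vectors  # weighted average of all word vectors
    return dict(zip(words, weights)), context

weights, context = attend("chasing")
for word, weight in weights.items():
    print(f"{word:>8}: {weight:.3f}")
print("Sum of weights:", round(sum(weights.values()), 6))
print("Context vector for 'chasing':", np.round(context, 3))
```

Because the cosine scores here all sit between 0.60 and 1.00, the softmax weights come out fairly close to one another. Trained models tend to produce more spread-out scores, which is why their attention can be much pickier than this toy version.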

The Equation Behind It All

When you look at the actual self-attention equation for the first time:

Me: “I don’t need math to explain love… except for this.”
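For reference, the equation usually meant here is scaled dot-product attention, the form used in Transformers. The symbols are the standard ones and have not been defined in this post so far: $Q$, $K$, and $V$ are matrices of query, key, and value vectors (one row per word), and $d_k$ is the length of each key vector.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Read it as a recipe: compare every query with every key ($Q K^{\top}$), divide by $\sqrt{d_k}$ so the scores don't blow up as vectors get longer, squash each row into weights that sum to 1 (the softmax), and use those weights to mix the value vectors.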

TL;DR of Self-Attention (In Case You Were Distracted by Memes)

- Self-attention lets each word in a sentence pay attention to every other word (and itself).

- It helps the model figure out important relationships between words in a sentence, like who’s chasing who.

- It’s at the core of Transformer models, which are like the superheroes of NLP: they save the world from bad language predictions.
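For the curious, the bullets above can be sketched in matrix form, where every word attends to every word in one go. This is a rough illustration of scaled dot-product self-attention, softmax(QKᵀ / √d_k) V, under loud assumptions: the embeddings `X` and the projection matrices `W_q`, `W_k`, `W_v` are random stand-ins for values a real model would learn, and the sizes `d_model` and `d_k` are arbitrary. The function name `self_attention` is hypothetical too.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

words = ["The", "cat", "chased", "the", "mouse"]
d_model, d_k = 4, 3  # toy sizes, chosen arbitrarily

# Random stand-ins for learned quantities (a real model would train these)
X = rng.normal(size=(len(words), d_model))  # one embedding row per word
W_q = rng.normal(size=(d_model, d_k))       # query projection
W_k = rng.normal(size=(d_model, d_k))       # key projection
W_v = rng.normal(size=(d_model, d_k))       # value projection

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over all rows of X at once."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])         # (n_words, n_words)
    scores -= scores.max(axis=-1, keepdims=True)    # stabilise the softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights, weights @ V

weights, output = self_attention(X, W_q, W_k, W_v)
print("Attention matrix shape:", weights.shape)
print("Row sums:", weights.sum(axis=1))
print("Output shape:", output.shape)
```

Row `i` of `weights` is how much word `i` looks at each word in the sentence (its own column included), and row `i` of `output` is that word's new, context-mixed vector.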

Next time you see self-attention, don’t panic. Just remember: it’s like every word going, “Hey, look at me!” 😎

Happy coding, and may your models pay attention to all the right things!